Each Monday, we’ll be bringing you cutting-edge research reviews, not only to make your health and productivity crazy simple, but also to keep you constantly up to date.

But today, in this special edition, we want to lay out plain and simple how to see through a lot of the tricks used not just by popular news outlets, but even sometimes the research publications themselves.

That way, when we give you health-related science news, you won’t have to take our word for it, because you’ll be able to see whether the studies we cite really support the claims we make.

Of course, we’ll always give you the best, most honest information we have… But the point is that you shouldn’t have to trust us! So, buckle in for today’s special edition, and never have to blindly believe sci-hub (or Snopes!) again.

The above now-famous Tumblr post that became a meme is a popular and obvious example of how statistics can be misleading, either by error or by deliberate spin.

But what sort of mistakes and misrepresentations are we most likely to find in real research?

Spin Bias

Perhaps most common in popular media reporting of science, the Spin Bias hinges on the fact that most people perceive numbers in a very “fuzzy logic” sort of way. Do you?

Try this:

A million seconds is about 11.6 days

A billion seconds is not weeks, but 13.2 months!

…just kidding, it’s actually nearly thirty-two years.

Did the months figure seem reasonable to you, though? If so, this is the same kind of “human brains don’t do large numbers” problem that occurs when looking at statistics.
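If you’d like to check those figures rather than take our word for it, here is a minimal Python sketch of the arithmetic. It assumes an average year of 365.25 days, and the constant names are ours, chosen purely for illustration.

```python
SECONDS_PER_DAY = 60 * 60 * 24   # 86,400 seconds in a day
DAYS_PER_YEAR = 365.25           # assumption: average year, allowing for leap years

million_in_days = 1_000_000 / SECONDS_PER_DAY
billion_in_years = 1_000_000_000 / SECONDS_PER_DAY / DAYS_PER_YEAR

print(f"A million seconds is about {million_in_days:.1f} days")
print(f"A billion seconds is about {billion_in_years:.1f} years")
```

The only difference between the two calculations is three extra zeroes, which is exactly the kind of difference our intuition tends to gloss over.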

Let's have a look at reporting on statistically unlikely side effects for vaccines, as an example:

“966 people in the US died after receiving this vaccine!” (So many! So risky!)

“Fewer than 3 people per million died after receiving this vaccine!” (Hmm, I wonder if it is worth it?)

“Half of unvaccinated people with this disease die of it” (Oh)
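The first two headlines can describe exactly the same data. As a rough sketch in Python, using only the two figures quoted above (the variable names are ours), we can work out how many people must have received the vaccine for both headlines to be true at once:

```python
deaths = 966                 # from the first headline
max_rate_per_million = 3     # "fewer than 3 people per million", from the second

# deaths / recipients * 1,000,000 < 3   means   recipients > deaths / 3 * 1,000,000
min_recipients = deaths / max_rate_per_million * 1_000_000

print(f"Both headlines hold if more than {min_recipients:,.0f} people were vaccinated")
```

Same count, same risk; the only thing that changed between the headlines is whether the denominator was mentioned.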

Here’s a visual explanation using the example of COVID and COVID vaccines, for the visual learners:

See how what we understand intuitively about numbers, and what the numbers actually mean, can sometimes be quite different!

How to check for this: ask yourself “is what’s being described as very common really very common?” To keep with the spiders theme, there are many (usually outright made-up) stats thrown around on social media about how near the nearest spider is at any given time. Apply this kind of thinking to medical conditions. If something affects only 1% of the population (So few! What a tiny number!), how far would you have to go to find someone with that condition? The end of your street, perhaps?
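To put a number on the “end of your street” question, here is a small Python sketch. It assumes that the people you meet are a random sample of the population (which real neighbours are not, quite), and the function name `people_needed` is our own invention for this example.

```python
import math

def people_needed(prevalence: float, target_probability: float) -> int:
    """Smallest group size where P(at least one person affected) >= target."""
    # P(nobody affected among n people) = (1 - prevalence) ** n,
    # so we solve (1 - prevalence) ** n <= 1 - target_probability for n.
    return math.ceil(math.log(1 - target_probability) / math.log(1 - prevalence))

prevalence = 0.01  # the 1% figure from the text
for target in (0.5, 0.9):
    n = people_needed(prevalence, target)
    print(f"{n} randomly chosen people give a {target:.0%} chance of at least one case")
```

In other words, a “tiny” 1% stops looking tiny once you count the households on a reasonably long street.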

Selection/Sampling Bias

Diabetes disproportionately affects black people, but diabetes research disproportionately focuses on white people with diabetes. There are many possible reasons for this, the most obvious being systemic/institutional racism. For example, advertisements for clinical trial volunteer opportunities might appear more frequently amongst a convenient, nearby, mostly-white student body. Selection bias of this kind makes a study much less reliable.

Alternatively: a researcher is conducting a study on depression, and advertises for research subjects. He struggles to get a large enough sample size, because depressed people are less likely to respond, but eventually gets enough. Little does he know, even the most depressed of his subjects are relatively happy and healthy compared with the silent majority of depressed people who didn’t respond.
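The depression example can be played out as a toy simulation in Python. Everything here is made up for illustration: the 0-to-1 severity scale, the population size, and above all the assumption that the chance of replying to the advert falls in direct proportion to severity.

```python
import random
from statistics import mean

random.seed(0)  # fixed seed so the sketch gives the same numbers each run

# Hypothetical severity scores: 0.0 = mild, 1.0 = severe
population = [random.random() for _ in range(10_000)]

# Assumption: the more severe the depression, the less likely a reply to the advert
respondents = [s for s in population if random.random() < (1 - s)]

population_mean = mean(population)
respondent_mean = mean(respondents)

print(f"Average severity, everyone:    {population_mean:.2f}")
print(f"Average severity, respondents: {respondent_mean:.2f}")
```

The researcher only ever sees the second number, and nothing in the sample itself hints that the first one is higher.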

How to check for this: Does the “method” section of the scientific article describe how they took pains to make sure their sample was representative of the relevant population, and how did they decide what the relevant population was?

Publication Bias

Scientific publications will tend to prioritise statistical significance. Which seems great, right? We want statistically significant studies… don’t we?

We do, but: usually, in science, we consider something “statistically significant” when it hits the magical marker of p=0.05 (in other words, if there were no real effect at all, the probability of getting a result at least this extreme by chance alone would be 1/20 or less).

However, this can result in researchers stopping testing once p=0.05 is reached, because they want to have their paper published. (“Yay, we’ve reached our magical marker and now our paper will be published”)

So, you can think of publication bias as the tendency for researchers to publish ‘positive’ results.

If it weren’t for publication bias, we would have a lot more studies that say “we tested this, and here are our results, which didn’t help answer our question at all”—which would be bad for the publication, but good for science, because data is data.

To put it in non-numerical terms: this is the same misrepresentation as the technically true phrase “when I misplace something, it’s always in the last place I look for it”—obviously it is, because that’s when you stop looking.
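To see why “stop as soon as you hit the marker” is a problem, here is a hedged Python simulation. It is a toy model, not anyone’s real study design: each “study” flips a fair coin 200 times (so there is truly nothing to find), we peek after every 20 flips, and the p-value uses a simple normal approximation. All the names and numbers are our own assumptions.

```python
import math
import random

random.seed(1)  # fixed seed so the sketch is repeatable

def p_value(heads: int, flips: int) -> float:
    """Two-sided p-value for 'is this coin fair?' (normal approximation)."""
    z = (heads - flips / 2) / math.sqrt(flips / 4)
    return math.erfc(abs(z) / math.sqrt(2))

STUDIES = 2_000
LOOKS = range(20, 201, 20)  # peek after every 20 flips, up to 200

stopped_early = 0  # studies that looked "significant" at some peek
waited = 0         # studies that looked "significant" at the planned end

for _ in range(STUDIES):
    heads = 0
    significant_at_some_peek = False
    for flips in range(1, 201):
        heads += random.random() < 0.5  # a fair coin: there is no real effect
        if flips in LOOKS and p_value(heads, flips) < 0.05:
            significant_at_some_peek = True
    stopped_early += significant_at_some_peek
    waited += p_value(heads, 200) < 0.05

peeking_rate = stopped_early / STUDIES
fixed_rate = waited / STUDIES

print(f"False alarms when stopping at the first p<0.05: {peeking_rate:.1%}")
print(f"False alarms when waiting for all 200 flips:    {fixed_rate:.1%}")
```

Every “significant” result in this simulation is a false alarm by construction, so the gap between the two rates is purely the effect of stopping when you like what you see.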

There’s not a good way to check for this, but be sure to check out sample sizes and see that they’re reassuringly large.

Reporting/Detection/Survivorship Bias

These are similar to selection bias, and they often overlap. Check this out:

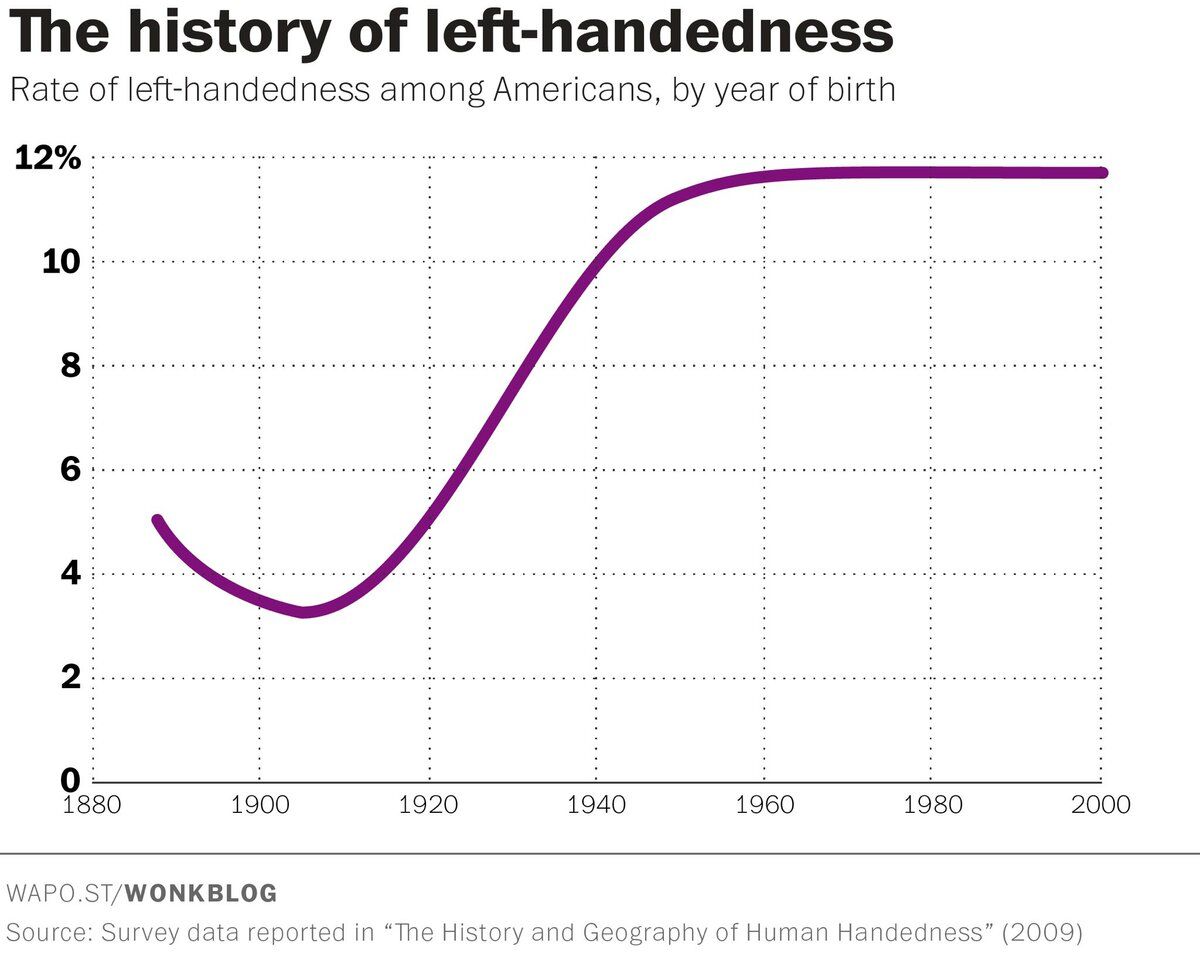

Why did left-handedness become so much more prevalent all of a sudden, and then plateau at 12%?

Simple: that’s when schools stopped forcing left-handed children to use their right hands instead.

In a similar fashion, countries have generally found that homosexuality became a lot more common once decriminalized. Of course the real incidence almost certainly did not change—it just became more visible to research.

So, these biases are caused when the method of data collection and/or measurement leads to a systematic error in results.

How to check for this: you’ll need to think this through logically, on a case by case basis. Is there a reason that we might not be seeing or hearing from a certain demographic?

And perhaps most common of all…

Confounding Bias

This is the bias that relates to the well-known idea “correlation ≠ causation”.

Everyone has heard the funny examples, such as “ice cream sales cause shark attacks” (in reality, both are more likely to happen in similar places and times; when many people are at the beach, for instance).

How can any research paper possibly screw this one up?

Often they don’t, and it’s a case of Spin Bias (see above), but examples that are not so obviously wrong “by common sense” often fly under the radar:

“Horse-riding found to be the sport that most extends longevity”

Should we all take up horse-riding to increase our lifespans? Probably not; the reality is that people who can afford horses can probably afford better than average healthcare, and lead easier, less stressful lives overall. Wealth is the confounding variable here: people with horses typically have wealthier lifestyles than those without.
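Here is the horse-riding example as a toy Python simulation. Every number in it is invented for illustration (the share of wealthy people, how likely each group is to ride, and the six extra years that wealth adds), and riding is deliberately given no effect on lifespan at all.

```python
import random
from statistics import mean

random.seed(2)  # fixed seed so the sketch is repeatable

people = []
for _ in range(50_000):
    wealthy = random.random() < 0.3                         # made-up share
    rides = random.random() < (0.5 if wealthy else 0.05)    # wealth makes riding likelier
    lifespan = random.gauss(78, 5) + (6 if wealthy else 0)  # riding adds nothing
    people.append((wealthy, rides, lifespan))

def gap(group):
    """Average lifespan of riders minus average lifespan of non-riders."""
    riders = [life for _, rides, life in group if rides]
    others = [life for _, rides, life in group if not rides]
    return mean(riders) - mean(others)

raw_gap = gap(people)
gap_wealthy = gap([p for p in people if p[0]])
gap_not_wealthy = gap([p for p in people if not p[0]])

print(f"Riders vs non-riders, everyone:         {raw_gap:+.1f} years")
print(f"Riders vs non-riders, wealthy only:     {gap_wealthy:+.1f} years")
print(f"Riders vs non-riders, non-wealthy only: {gap_not_wealthy:+.1f} years")
```

Comparing like with like (wealthy riders against wealthy non-riders, and so on) is the simplest way to see whether an apparent effect survives once the confounder is held steady. When you read a paper, look for whether the authors did something similar.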

In short, when you look at the scientific research papers cited in the articles you read (you do look at the studies, yes?), watch out for these biases that found their way into the research, and you’ll be able to draw your own conclusions, with well-informed confidence, about what the study actually tells us.

Science shouldn’t be gatekept, and definitely shouldn’t be abused, so the more people who know about these things, the better!

One-Minute Book Review

Are you a cover-to-cover person, or a dip-in-and-out person?

Mortimer Adler and Charles Van Doren have made a science out of getting the most from reading books.

They help you find what you’re looking for (Maybe you want to find a better understanding of PCOS… maybe you want to find the definition of “heuristics”… maybe you want to find a new business strategy… maybe you want to find a romantic escape… maybe you want to find a deeper appreciation of 19th century poetry… maybe you want to find… etc).

They then help you retain what you read, and make sure that you don’t miss a trick.

Whether you read books so often that optimizing this is of huge value for you, or so rarely that when you do, you want to make it count, this book could make a real difference to your reading experience forever after.

Wishing you a beautiful week ahead.

Until tomorrow,

The 10almonds team

DISCLAIMER: None of this is medical advice. This newsletter is strictly educational and is not medical advice or a solicitation to buy any supplements or medications, or to make any medical decisions. Always be careful. Always consult a professional. Additionally, we may earn a commission on some products/services that we link to; but they’re all items that we believe in :)